Pass your codebase to AI with Foldup

January 29th, 2025

Coding

AI

LLMs

AI has been taking over the world lately. Practically every industry has been impacted in ways that would probably have been thought impossible just a few years ago. This is particularly true when it comes to AI code generation.

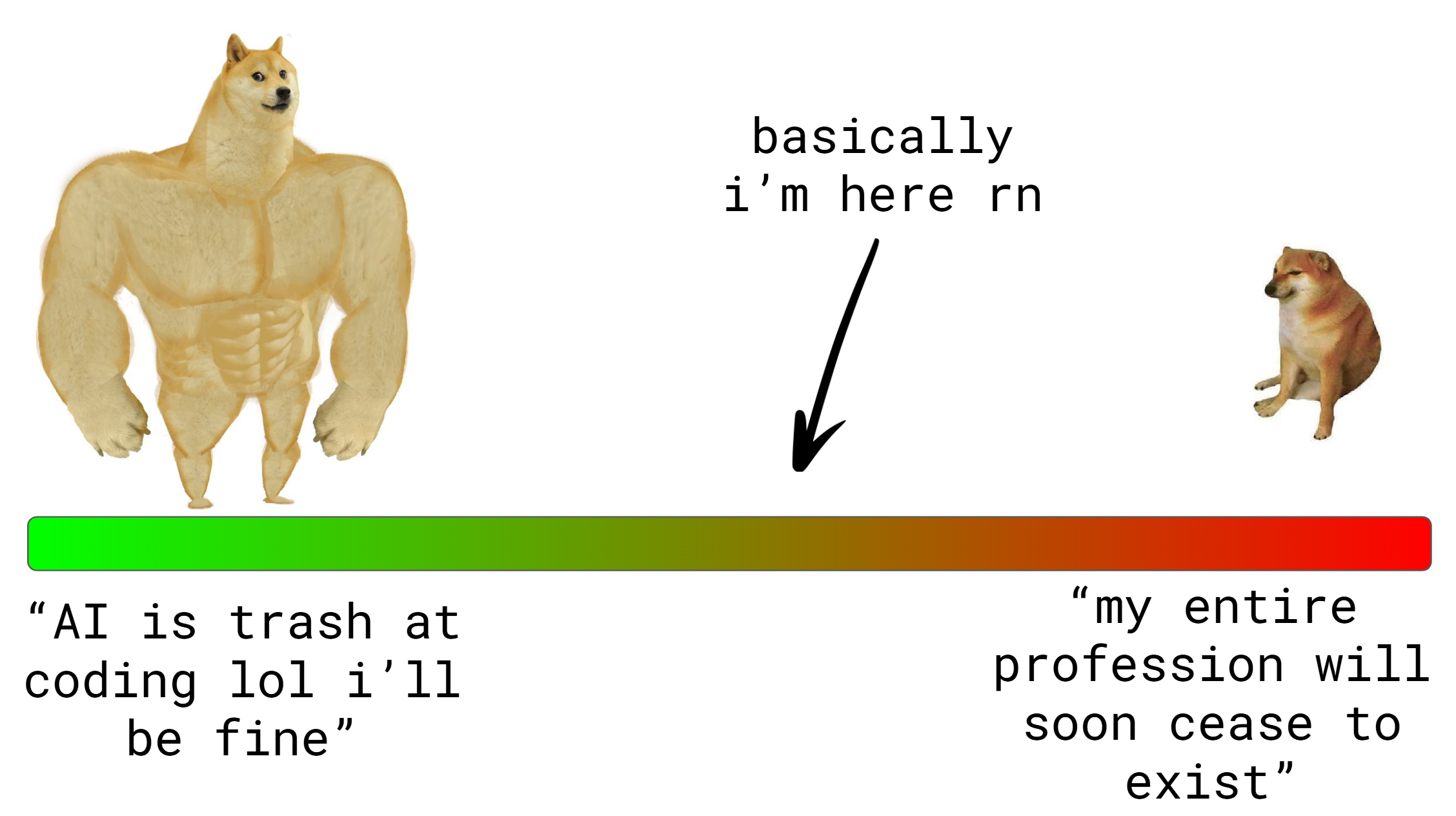

When I first used ChatGPT to write code back in late 2022 (when it came out), I can't say I was at all surprised when it wrote me complete garbage code lol. My expectations were low and they were perfectly met. Then over time, these LLM systems started to evolve and we began to see insane results; we even got entire companies built on the concept of an "AI programmer". Safe to say my outlook quickly changed to one of defeat lmao. I just thought "well, that's my job cooked then" and started to consider what my new career path would be (chef?? musician??). But now, having used these tools more and more in my day-to-day, I think I've sort of settled somewhere in the middle of "it's so over" and "we're so back".

I've accepted that eventually, probably inevitably, these LLMs will reach a point where the requirement for human interaction is minimal (or even zero?). At the same time though, I'm beginning to realise we aren't quite at that point yet. Still some time left before my boss can just fire me and spin up a NathanGPT LLM to do my job instead. And in fact, I'm beginning to believe more and more that those of us who manage to integrate these tools to accelerate our work will probably be the last to be replaced by them. So, that's what I'm trying to do.

So, how do I code with AI?

Glad you asked! To begin with, I still think the most powerful way to do this is to use Cursor, probably the most popular AI code editor right now. It's a fork of VS Code, so the feel is very similar to what you're probably already used to. With Cursor, the LLMs it uses can already draw on your entire codebase right out of the box.

However, I've found that this isn't always the best approach. Cursor works great for what I like to call "action tasks": things that require the LLM to actually write code for you, like adding new features to an existing codebase or churning out unit tests for a new function you just wrote. But as programmers, there is so much more to the job than just writing code.

"Orchestration tasks"

What if you want to ask an LLM about (and, crucially, ideate on) the system architecture for a new feature? Or the best way to integrate an external service into your code? Or the pros and cons of a specific refactor you had in mind? These are what I like to call "orchestration tasks": things that don't require the LLM to actually write any code, but instead require it to "think" and give you opinions, tradeoffs and so on. I've found that these orchestration tasks go much better if you just hop into a chat UI (something like Claude, ChatGPT or DeepSeek). That way:

- Your chats are SAVED. This is crucial for orchestration tasks. Cursor tries to do this but my chats still somehow disappear all the time.

- On top of that, the web-based nature of these chat UIs means every single chat has a unique URL. You can save them and reference them in your own notes very easily. This has been a game changer for me.

- You don't have to worry about Cursor trying to make edits to your code when you only wanted its thoughts.

- You can utilise the "projects" feature of these chat UIs to keep your thoughts organised for a specific project.

Now this all makes sense, but there's still a problem. How do you give these chat UI LLMs the context of your codebase?

Enter Foldup

You guessed it chat, this is the entire point of the post lol. Foldup is an open-source CLI tool I made that allows you to "fold" your codebase up into a single Markdown file, which can then easily be passed to any LLM or chat UI.
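To make the "folding" idea concrete, here's a rough Python sketch of the general technique: walk a directory, skip the junk, and paste every readable text file into one Markdown string. To be clear, this is not Foldup's actual implementation. The `fold` function, the `SKIP_DIRS` set and the `MAX_BYTES` limit are all made up for illustration.

```python
from pathlib import Path

# Hypothetical defaults for this sketch; not Foldup's real configuration.
SKIP_DIRS = {".git", "node_modules", "__pycache__", ".venv"}
MAX_BYTES = 100_000  # skip very large files so the result stays paste-able


def fold(root: str) -> str:
    """Return one Markdown string containing every readable text file under root."""
    root_path = Path(root)
    sections = []
    for path in sorted(root_path.rglob("*")):
        rel = path.relative_to(root_path)
        # Ignore directories themselves and anything inside a skipped directory.
        if not path.is_file() or SKIP_DIRS.intersection(rel.parts):
            continue
        if path.stat().st_size > MAX_BYTES:
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # binary or unreadable file, leave it out
        # Use a longer fence when the file itself contains a triple backtick.
        fence = "`" * 4 if "`" * 3 in text else "`" * 3
        lang = path.suffix.lstrip(".")
        sections.append(
            f"## {rel.as_posix()}\n\n{fence}{lang}\n{text.rstrip()}\n{fence}\n"
        )
    return "\n".join(sections)
```

Calling `fold(".")` from a project directory returns the whole thing as a single string, which you could write to a file and drop into a chat. A real tool has more to deal with (ignore files, size budgets, a directory tree at the top), so treat this as the core idea only.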

Now, this certainly isn't the first of its kind. I actually got the inspiration from an npm package called ai-digest. But then one day that package completely disappeared off the face of the earth lmao. And then whilst I was researching some possible alternatives, I realised "wait a minute... I could literally just....... build this myself ??"

Sooo I did! It's fully open-source (here's the repo if you want to check it out) and available on PyPI as well. You can get started with a simple pip install, and then you can just run it from your project directory.

And that's it! The exact commands, plus details for all the options and features, are on the repo.

Btw, please be careful

I wanted to add this disclaimer because yes, AI is beginning to feel like this super cracked genius dev that knows everything about every piece of code in existence. And it undoubtedly has accelerated my own development workflow significantly. But please don't be fooled, it's still REALLY STUPID sometimes. Hallucinations still exist and are still pretty common. I can't count the number of times Claude has given me code or advice that just makes zero sense in the context of the codebase, or sometimes would even have been harmful to the project if I had just blindly used it.

Honestly even if everything works perfectly, you're still just shooting yourself in the foot if you don't understand the code the LLM is giving you. You'll struggle to maintain the code, you'll end up in these loops of just begging the LLM to fix its own errors, and worst of all you'll never learn anything.

So, NEVER COMMIT CODE THAT YOU DON'T UNDERSTAND. Ask the LLM to explain more, ask it to write documentation, ask it to simplify things. Use it not just as a tool to accelerate your work, but as a tool to help you learn. I think this is the most powerful way to use these tools.

Final thoughts

Anyways, that's all I've got for now. I've been loving tinkering with LLMs and working with Foldup lately, and I'm sure I'll be using it more and more in the future. Let me know if you check it out! And if you'd like to talk more about LLM-assisted coding (or coding/LLMs/tech in general), please feel free to reach out (:

Happy coding!

— Nathan